clearString neatComponents


Using LLM Chat Completion

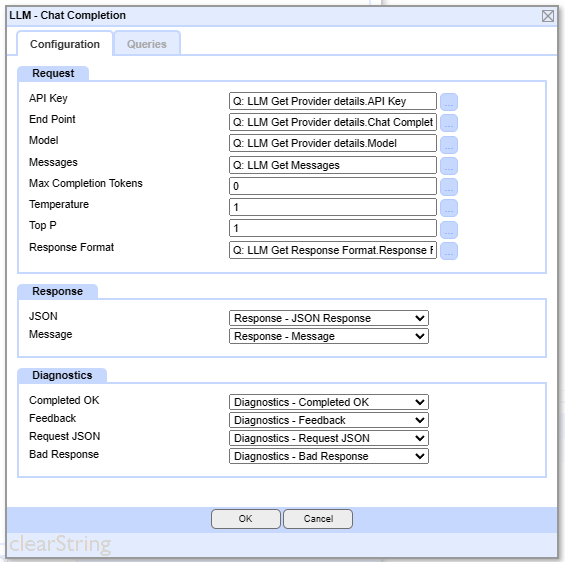

The Chat Completion Action handles the core communication with the AI Large Language Model (LLM) engine.

To make the best use of it, you need to provide the logic, extra actions and components that prepare the data sent to the AI engine and that work with what it returns.

There is no single best way to do this, but this document shows some example techniques that can be used.

This example is taken from one of the demonstration sites, so you can take a copy of this and explore it directly.

Example Configuration

The Chat Completion action is configured within the Record Change event of a Table, "LLM Question".

In the Request section we take most of the values from Queries, rather than entering them directly, primarily to give flexibility over what they are.

AI LLM Provider

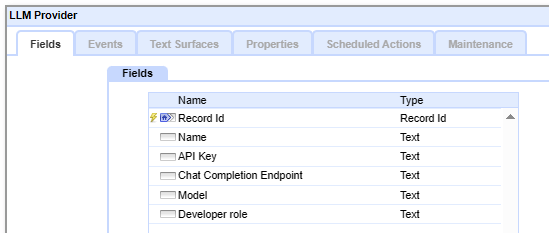

The API Key, End Point and Model work as a set to define which AI Chat Completion API will be used, where it is, and the key needed to use it. These are defined in another Table, "LLM Provider", and a Recordlink field in "LLM Question" links across to the record containing the details to be used for this particular request.

The field structure for the "LLM Provider" Table is quite simple:

The one field that may be less obvious is the 'developer role'. This is the role name used for the prompt instructions defining what the AI engine should do. This varies by provider and model, and is likely to be either 'developer' or 'system'. The provider's documentation will detail which should be used. OpenAI used to use 'system' but now use 'developer'.
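As a rough illustration of how the provider settings and the developer role fit together, the sketch below builds (but does not send) an HTTP request in the style of an OpenAI-compatible Chat Completion API. The `LLMProvider` class, its field names, the example values and the use of a Bearer token header are all assumptions made for illustration; the Chat Completion action assembles the real request for you.

```python
import json
import urllib.request
from dataclasses import dataclass


@dataclass
class LLMProvider:
    """Hypothetical stand-in for one record in the "LLM Provider" Table."""
    api_key: str
    end_point: str
    model: str
    developer_role: str  # 'developer' or 'system', per the provider's documentation


def build_request(provider, developer_prompt, question):
    """Build (without sending) a Chat Completion request for the given provider."""
    body = {
        "model": provider.model,
        "messages": [
            {"role": provider.developer_role, "content": developer_prompt},
            {"role": "user", "content": question},
        ],
    }
    return urllib.request.Request(
        provider.end_point,
        data=json.dumps(body).encode("utf-8"),
        headers={
            # Assumption: the provider expects a Bearer token, as OpenAI does.
            "Authorization": f"Bearer {provider.api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Example values only; substitute the details from your own provider record.
provider = LLMProvider(
    api_key="sk-example",
    end_point="https://api.example.com/v1/chat/completions",
    model="example-model",
    developer_role="developer",
)
request = build_request(provider, "Summarise the text the user supplies.", "Here is my text.")
payload = json.loads(request.data)
print(payload["messages"][0]["role"])
```

Switching to a provider that expects 'system' then only requires changing the value held in the provider record, not the logic that builds the request.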

Messages

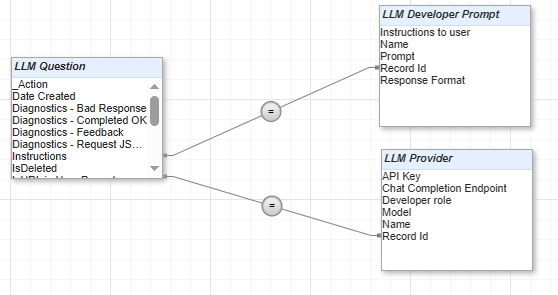

Back in the Chat Completion action in the "LLM Question" table, the core field is "Messages". This requires a Query that returns two records: one containing the developer prompt, and one containing the user's question.

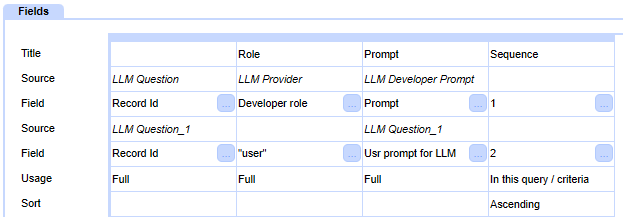

This is defined in the Query "Q: LLM Get Messages".

This query contains two Source Groups.

The first returns the Developer Prompt:

It consists of the "LLM Question" Table, so we can specify criteria to restrict it to the record in "LLM Question" we are working with, and then joins across to two other tables: "LLM Developer Prompt", which contains the prompt, and "LLM Provider", which is used only to give us the role name ("system" or "developer").

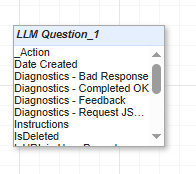

We then have a second Source Group, which just contains a second instance of "LLM Question". (The query adds the suffix _1 to disambiguate it from "LLM Question" in the first Source Group.)

We then specify the fields we need:

Note we have added a Sequence expression, with integers of 1 and 2, and sort by this, to ensure we present the Developer Prompt before the User one.

The Role field pulls in the value from LLM Provider for the developer record, but it is always "user" for the user one, so that is defined explicitly here.

In the Chat Completion action, we select this Query, and map the fields from the Query for their uses as Role and Content (We don't use Name here, that's for use when you need to distinguish between different users within a single chat conversation).
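The shape of the data this Query produces can be sketched outside neatComponents as follows. This is only an analogy in Python: the function name and the sample prompt text are hypothetical, while Sequence, Role and Content mirror the query fields described above.

```python
def get_messages(developer_role, developer_prompt, user_question):
    """Mimic the two Source Groups of "Q: LLM Get Messages" as a sorted list of rows."""
    rows = [
        # Second Source Group: the user's question; Role is fixed as "user".
        {"Sequence": 2, "Role": "user", "Content": user_question},
        # First Source Group: the developer prompt; Role comes from "LLM Provider".
        {"Sequence": 1, "Role": developer_role, "Content": developer_prompt},
    ]
    # Sort by Sequence so the developer prompt is always presented first,
    # whatever order the Source Groups happen to return their rows in.
    rows.sort(key=lambda row: row["Sequence"])
    # Map the query fields to the Role and Content slots of the action.
    return [{"role": row["Role"], "content": row["Content"]} for row in rows]


messages = get_messages("system", "You are a helpful assistant.", "What is a Source Group?")
for message in messages:
    print(message["role"], "-", message["content"])
```

The rows are deliberately listed out of order here to show that it is the sort on Sequence, not the order of the Source Groups, that guarantees the developer prompt comes first.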

Response Format

The Chat Completion action has the option to provide a Response Format. Without a Response Format, the response is given as human-readable text without any particular structure.

However, if you want to manipulate the response, for example if it is a set of items you want to split out into separate records in a table, then you can specify the format here. For this, you give a JSON Schema, which details the field names and the relationships between them.

The results then come back in a JSON format, and this can be passed into a Data Import component to extract the data and store it in records and fields for subsequent use.

The above example JSON Schema is for a task that involves reading in the transcript of a meeting, and providing information about it, including a list of the action points arising.

It defines a 'meeting' object, within which there are fields of "title", "summary", "minutes" and "date".

There is an array of "actions", each of which has fields of "action_name", "action_person", "action_deadline" and "action_date". We don't specify how many of these there will be; that will be determined from the information provided by the user.
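For readers without access to the demonstration site, a schema of this shape might look like the sketch below, written as a Python dictionary and printed as JSON. It is a reconstruction from the field names listed above, not a copy of the schema used on the demonstration site: the string types, the "required" lists, the "additionalProperties" settings and the placement of the fields at the top level are all assumptions.

```python
import json

# Assumed reconstruction of the schema described above; all types are guesses.
meeting_schema = {
    "title": "meeting",
    "type": "object",
    "properties": {
        "title": {"type": "string"},
        "summary": {"type": "string"},
        "minutes": {"type": "string"},
        "date": {"type": "string"},
        "actions": {
            "type": "array",
            # No minItems or maxItems: the number of actions depends on the transcript.
            "items": {
                "type": "object",
                "properties": {
                    "action_name": {"type": "string"},
                    "action_person": {"type": "string"},
                    "action_deadline": {"type": "string"},
                    "action_date": {"type": "string"},
                },
                "required": ["action_name", "action_person",
                             "action_deadline", "action_date"],
                "additionalProperties": False,
            },
        },
    },
    "required": ["title", "summary", "minutes", "date", "actions"],
    "additionalProperties": False,
}

# The printed JSON text is what would be supplied as the Response Format.
print(json.dumps(meeting_schema, indent=2))
```

Some providers place extra requirements on schemas used for structured output, so check the provider's documentation before relying on any particular keyword.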

Once the LLM Chat Completion action has been executed, the response is stored in the "Response - Message" large text field.

If it is plain text (i.e. no Response Format was specified), it can be displayed to the user as-is.

If there was a Response Format specified, then the response will be in JSON format, and it will need to be extracted.

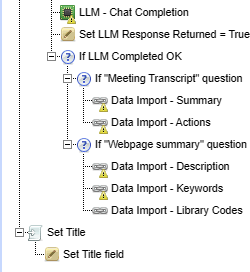

This is managed in the Record Change event of "LLM Question".

This starts by using some conditions to ensure the Data Import is only attempted if the results came back OK, and only for the correct question type.

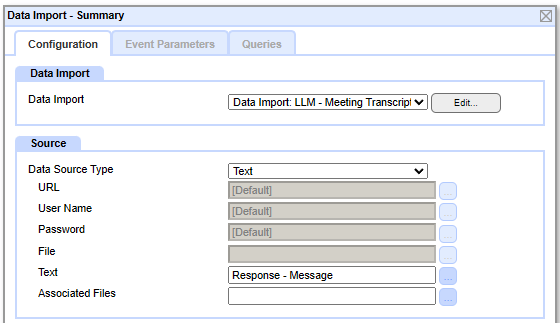

Then the Data Import components are called.

Note: It would be possible to combine all the extraction into a single Data Import component, but for demonstration purposes it is easier to see what is happening if it is split into two, one for the initial fields, and one for the repeating array.

The Data Import action simply calls a Data Import component, and passes in the field containing the JSON, "Response - Message".

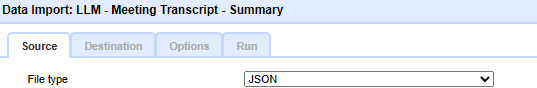

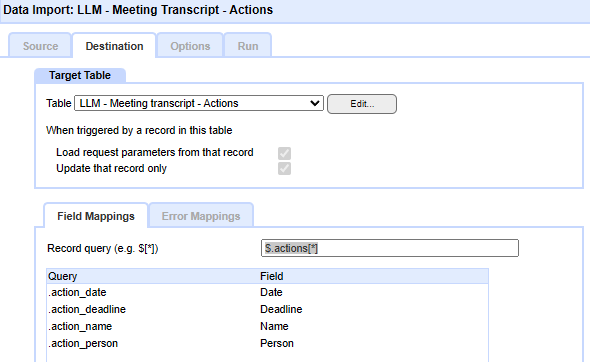

Then the Data Import component is configured:

On the Source tab it is set to read JSON (as opposed to CSV etc.)

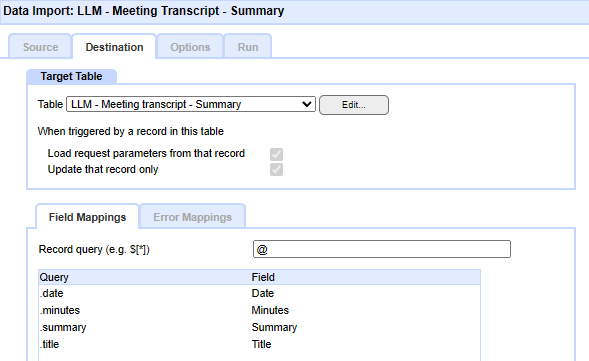

and the Destination tab contains the configuration to split out the various fields.

For the initial fields, which aren't in an array, the Record query is @.

The field names used in the JSON are shown here in the Query column, all prefixed with a dot/period.

For the other Data Import component, that extracts the fields from the repeating array, the Record query is different: $.actions[*]
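To make the effect of the two Record queries concrete, the sketch below performs the equivalent extraction in Python. The sample response is invented, the function names are hypothetical, and it assumes the meeting fields sit at the top level of the JSON alongside the "actions" array, as the query $.actions[*] suggests.

```python
import json

# Invented sample of a structured response; real content comes from the AI engine.
response_message = json.dumps({
    "title": "Weekly planning",
    "summary": "Agreed priorities for the coming week.",
    "minutes": "Attendees reviewed open tasks and assigned owners.",
    "date": "2025-01-15",
    "actions": [
        {"action_name": "Draft proposal", "action_person": "Alex",
         "action_deadline": "2025-01-22", "action_date": "2025-01-15"},
        {"action_name": "Book venue", "action_person": "Sam",
         "action_deadline": "2025-01-29", "action_date": "2025-01-15"},
    ],
})

MEETING_FIELDS = ("title", "summary", "minutes", "date")
ACTION_FIELDS = ("action_name", "action_person", "action_deadline", "action_date")


def import_meeting(response_text):
    """First import: a single record, with fields read from the current object."""
    current = json.loads(response_text)
    return {name: current.get(name) for name in MEETING_FIELDS}


def import_actions(response_text):
    """Second import: one record per array element, like the query $.actions[*]."""
    root = json.loads(response_text)
    return [{name: item.get(name) for name in ACTION_FIELDS}
            for item in root.get("actions", [])]


meeting = import_meeting(response_message)
actions = import_actions(response_message)
print(meeting["title"], "-", len(actions), "actions")
```

The first function always yields exactly one record, while the second yields as many records as the AI engine returned actions, which is why the repeating array suits a separate import.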

Supporting user actions

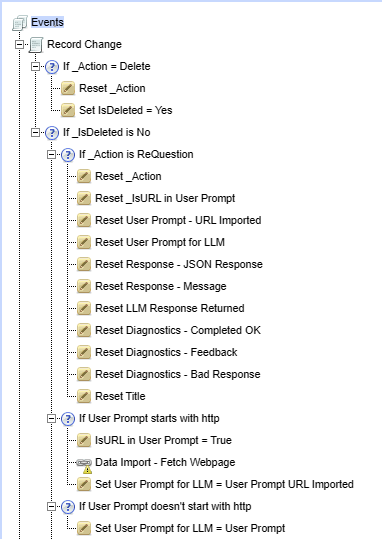

In addition to processing a user's question, the event tree in "LLM Question" supports several associated tasks:

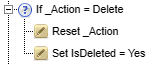

Deleting a question

Each time a user asks a question a new record is created in the table, and displayed to the user in the list of "Previous questions".

However sometimes a user will not want that to be shown, so the user is provided with a dialog in which they can choose to delete one.

For audit purposes, the record isn't actually deleted; instead a checkbox field "IsDeleted" is set to Yes.

The list of displayed questions then contains a criterion that filters these records out.
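This "soft delete" pattern can be sketched in a few lines of Python. The records are plain dictionaries and the function names and "Question" field are hypothetical; only the "IsDeleted" field name comes from the example site.

```python
def delete_question(record):
    """Mark the record as deleted instead of removing it, preserving the audit trail."""
    record["IsDeleted"] = True


def previous_questions(records):
    """Return only the records that should appear in the "Previous questions" list."""
    return [record for record in records if not record.get("IsDeleted", False)]


questions = [
    {"Question": "Summarise this meeting", "IsDeleted": False},
    {"Question": "List the action points", "IsDeleted": False},
]
delete_question(questions[0])

visible = previous_questions(questions)
print([record["Question"] for record in visible])
print(len(questions), "records still stored")
```

Because the filter lives in the list rather than in the data, an audit view can show every record simply by omitting the criterion.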

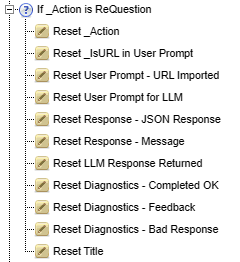

Asking again

When the user sees the results of their question, they may wish to ask it again. This might be to:

- Ask the same question of the same AI model again - since each answer attempt will be slightly different.

- Ask the same question of a different AI model

- Ask a similar question - where editing the first question is easier than starting over.

In all of these cases the user is presented with the existing question record, so they can edit the question and/or change the AI model to be used. When they save this, the "Submit" button is actually linked to the "Save As" function, meaning the original record is left untouched and the question is stored in a new record. This also sets the _Action field, in this case to "ReQuestion". The event condition looks for this value and clears any results fields copied over from the original question before running the Chat Completion action.
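The flow can be sketched in Python as below. The function names and the contents of `RESULT_FIELDS` are hypothetical; "_Action", "ReQuestion", "User Prompt" and "Response - Message" are taken from the example, and the real event tree may clear more fields than shown here.

```python
import copy

# Hypothetical list of the results fields to clear; the real table may hold more.
RESULT_FIELDS = ("Response - Message",)


def save_as(original, edits):
    """Copy the record, apply the user's edits, and flag it as a re-question."""
    new_record = copy.deepcopy(original)  # the original record is left untouched
    new_record.update(edits)
    new_record["_Action"] = "ReQuestion"
    return new_record


def prepare_for_completion(record):
    """Event condition: clear copied-over results before asking again."""
    if record.get("_Action") == "ReQuestion":
        for field in RESULT_FIELDS:
            record[field] = None
    return record


first = {"User Prompt": "Summarise this meeting", "Response - Message": "An earlier answer"}
second = prepare_for_completion(save_as(first, {"User Prompt": "Summarise this meeting briefly"}))

print(first["Response - Message"])
print(second["User Prompt"], "|", second["Response - Message"])
```

Clearing the copied results before the new request means a failed call cannot leave the old answer displayed against the new question.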

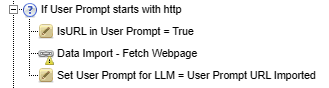

Reading webpages

Most Large Language Models, when accessed via their Chat Completion APIs, cannot browse the internet to read webpages. If the user gives a URL, the AI engine will only see the URL itself, not the actual page, and will likely try to guess what the page contains, which is of little value.

To work around this, in the "LLM Question" event tree, if the user starts their question with "http" then it assumes this is the start of a URL, attempts to fetch the contents of the webpage itself, and then gives that to the Chat Completion action instead of the URL.

The core fetching of the webpage is performed by a Data Import component.

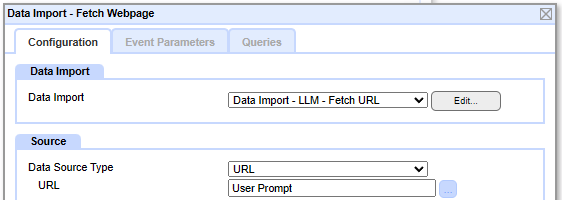

This is called by a Data Import action:

This specifies the Data Import Component, and informs it that it should treat the contents of the User Prompt field as a URL.

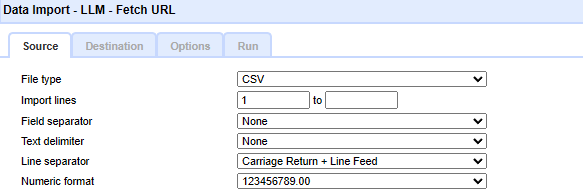

The Data Import Component knows how to handle CSV, JSON or XML files, but doesn't have an explicit option for general text files such as HTML files.

To solve this, we simply tell it to treat it as a CSV file, without any comma or line separators:

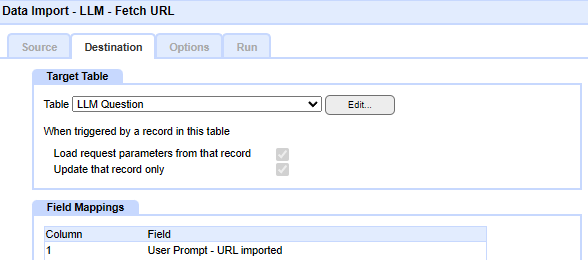

Then on the Destination tab, the extracted text is mapped to the field "User Prompt - URL imported" in the original "LLM Question" table.

As it has been triggered from that same table, it updates the field in the record that called it, rather than creating a new record.
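Outside neatComponents, the same decision can be sketched in Python using only the standard library. The function names are hypothetical, and the `fetch` parameter exists only so the example can be run with a stand-in fetcher instead of making a real network request.

```python
import urllib.request


def fetch_page(url, timeout=10):
    """Download a page and return its raw text (a plain HTTP GET, no JavaScript)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")


def prompt_for_llm(user_prompt, fetch=fetch_page):
    """Return the text to send: the page contents for a URL, else the prompt itself."""
    # Same simple test as the event tree: a question starting with "http" is a URL.
    if user_prompt.startswith("http"):
        return fetch(user_prompt)
    return user_prompt


def fake_fetch(url):
    """Stand-in fetcher so the example runs without network access."""
    return f"<html><body>Contents of {url}</body></html>"


print(prompt_for_llm("https://example.com/minutes", fetch=fake_fetch))
print(prompt_for_llm("What is a Source Group?", fetch=fake_fetch))
```

As in the event tree, the whole page is passed through as a single block of text, markup included; stripping the HTML tags first would be a separate step that this example does not attempt.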

The complete event tree

Putting all this together gives an event tree:

Copyright © 2026 Enstar LLC All rights reserved