clearString neatComponents LLM Chat Completion Event Action


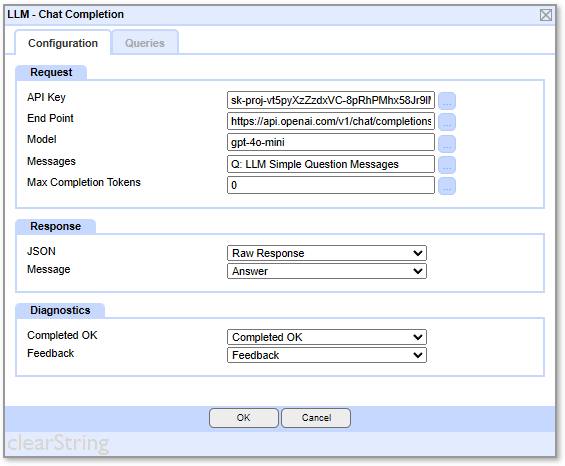

The LLM Chat Completion action sends a request to an AI LLM provider and stores its response.

Note: The Queries tab can be used to define the queries used by the API Key, End Point, Model and Max Completion Tokens fields. The Messages field has its own dialog for defining the query it uses, because it requires multiple fields to be mapped.

Configuration Tab:

Request

This section defines all the information needed for the request.

API Key

This is the API Key issued to you by the AI LLM provider (e.g. OpenAI or DeepSeek).

End Point

This is the URL of the service provided by the AI LLM provider

Example: For OpenAI this is: https://api.openai.com/v1/chat/completions

Model

Providers often offer a number of different models, each with a different set of capabilities and prices. Enter the name of the model you want to use.
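As a rough illustration of how the API Key, End Point and Model fields fit together, the sketch below assembles (but does not send) a request in the style of OpenAI's Chat Completions API. It is not the action's actual implementation: the function name `build_request`, the key `sk-placeholder` and the model name `example-model` are placeholders invented for this example, and other providers may expect different headers.

```python
import json
import urllib.request


def build_request(api_key, end_point, model, messages):
    """Assemble (but do not send) an OpenAI-style chat completion request."""
    body = {"model": model, "messages": messages}
    return urllib.request.Request(
        end_point,
        data=json.dumps(body).encode("utf-8"),
        headers={
            # OpenAI-style providers take the API Key as a bearer token.
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


req = build_request(
    api_key="sk-placeholder",  # placeholder, not a real key
    end_point="https://api.openai.com/v1/chat/completions",
    model="example-model",  # placeholder model name
    messages=[{"role": "user", "content": "Hello"}],
)
```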

Messages

The action needs to send a set of messages to the AI LLM system. These tell it what it is being asked to do, and supply any input from a user.


This consists of a set of records returned by a query. Each record contains two or three fields:

- Role (required)

- Content (required)

- Name (optional)

Typically the first record has a Role of "developer", and its Content holds the instructions that define what the LLM should do (the product of prompt engineering).

In a simple scenario this is followed by another record with a Role of "user", whose Content is the text provided by a user. The LLM processes that text as instructed by the Content of the earlier developer record.

The sequence of the records is important.

In a more advanced scenario there may be more records. For example, in a 'chat' scenario with a back-and-forth conversation between the user and the AI, each stage of the conversation must be recorded and then included as a separate record in this query. In these cases each response from the AI should be presented with a Role of "assistant".

The optional "Name" field can be used to distinguish between multiple users in a more complex interaction.
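The mapping from query records to the messages list can be sketched as follows. This is a minimal illustration that assumes OpenAI-style message objects; the helper `records_to_messages` and the sample records are hypothetical, and the action's internal handling may differ.

```python
def records_to_messages(records):
    """Convert query records into an OpenAI-style messages list, preserving order."""
    messages = []
    for record in records:
        message = {
            # OpenAI-style APIs expect lowercase role names.
            "role": record["Role"].lower(),
            "content": record["Content"],
        }
        # Name is optional, so include it only when the record supplies one.
        name = record.get("Name")
        if name:
            message["name"] = name
        messages.append(message)
    return messages


# Sample records, in the order the query would return them.
records = [
    {"Role": "developer", "Content": "Summarise the user's text in one sentence."},
    {"Role": "user", "Content": "The meeting moved to Thursday at 3pm.", "Name": "alice"},
]
messages = records_to_messages(records)
```

Because the list is built in record order, the query's sort order determines the conversation order seen by the LLM.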

Max Completion Tokens

The maximum number of tokens the AI LLM system may consume in completing the request.

Optional (leave at 0 to not specify a limit).
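One plausible reading of the 0 setting is that the limit is simply left out of the request. The sketch below shows that behaviour under the assumption that the provider accepts OpenAI's `max_completion_tokens` parameter; the helper name `apply_token_limit` is hypothetical, and the action may handle a value of 0 differently.

```python
def apply_token_limit(body, max_completion_tokens):
    """Return a copy of the request body, adding a token limit only when one is set."""
    limited = dict(body)
    if max_completion_tokens > 0:
        limited["max_completion_tokens"] = max_completion_tokens
    return limited


unlimited = apply_token_limit({"model": "example-model"}, 0)
capped = apply_token_limit({"model": "example-model"}, 256)
```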

Response

This section takes the response that comes back from the AI LLM API and stores it in fields in the table.

JSON

This is the raw JSON response. It contains a number of different fields, including the core 'message' response, and also metadata such as the number of tokens used, which can be useful for billing.

Store this in a Large Text field.

Message

This is the text response to the request. Typically this is simple text, but depending on the detail of the prompt engineering it may itself be a JSON formatted string, which can be interpreted using the Data Import component.
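To show how the JSON and Message values relate, the sketch below pulls the message text and token count out of a response shaped like OpenAI's Chat Completions output. The sample response is invented for illustration, the helper name `extract_message_and_usage` is hypothetical, and other providers may structure their responses differently.

```python
import json

# Invented sample in the shape of an OpenAI-style chat completion response.
SAMPLE_RESPONSE = """
{
  "id": "chatcmpl-example",
  "choices": [
    {
      "index": 0,
      "message": {"role": "assistant", "content": "The meeting is now on Thursday at 3pm."},
      "finish_reason": "stop"
    }
  ],
  "usage": {"prompt_tokens": 25, "completion_tokens": 10, "total_tokens": 35}
}
"""


def extract_message_and_usage(raw_json):
    """Return the first choice's message text and the total token count, if present."""
    response = json.loads(raw_json)
    message = response["choices"][0]["message"]["content"]
    total_tokens = response.get("usage", {}).get("total_tokens")
    return message, total_tokens


message, total_tokens = extract_message_and_usage(SAMPLE_RESPONSE)
```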

Diagnostics

This section gives the standard event action diagnostic feedback.

Completed OK

Store in a Checkbox field

Feedback

Store in a Text field

Note that the JSON field in the Response section may also give further detailed diagnostics depending on the situation.


Copyright © 2026 Enstar LLC. All rights reserved.